Custom Shader Effects

While Solar2D has an extensive list of built-in shader effects, there are times when you may need to create custom effects. This guide outlines how to create custom effects using custom shader code, structured in the same way that Solar2D’s built-in shader effects are implemented.

Writing custom effects is an advanced developer feature. If you want to take advantage of this feature, this guide assumes that you are already familiar with and fluent in GLSL ES (OpenGL ES 2.0).

Custom effects are supported on iOS, Android, macOS desktop, and Win32 desktop.

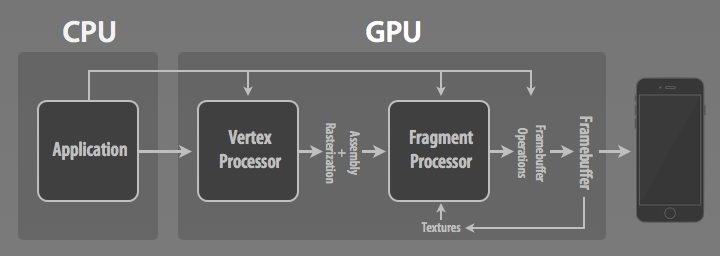

GPU Rendering Pipeline

In a programmable graphics pipeline, the GPU is treated as a stream processor. Data flows through multiple processing units and each unit is capable of running a (shader) program.

In OpenGL-ES 2.0, data flows from (1) the application to (2) the vertex processor to (3) the fragment processor and finally to (4) the framebuffer/screen.

In Solar2D, rather than write complete shader programs, custom shader effects are exposed in the form of vertex and fragment kernels which allow you to create powerful programmable effects.

Programmable Effects

Solar2D allows you to extend its pipeline to create several types of custom programmable effects, organized based on the number of input textures:

- Generators — Procedurally-generated effects which don’t operate on any textures/images.

- Filters — Effects which operate on a single texture/image (BitmapPaint).

- Composites — Effects which operate on two textures/images, combined together as a CompositePaint.

Creating Custom Effects

To define a new effect, call graphics.defineEffect(), passing in a Lua table which defines the effect. In order for this table to be a valid effect definition, it must contain several properties:

- category — The type of effect.

- name — The name within a given category.

- vertex and/or fragment — Defines where your shader code goes, as described in the Defining Kernels section below.

A complete and detailed description of all properties is available in the graphics.defineEffect() documentation.

Naming Effects

The name of an effect is determined by the following properties:

- category — The type of effect.

- group — The group of effect. If not provided, Solar2D will assume custom.

- name — The name within a given category.

When you set an effect on a display object, you must provide a fully qualified name, joining these properties with a period (.) as follows:

local effectName = "[category].[group].[name]"
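As a minimal sketch of the naming rule above, the fully qualified name can be assembled from the same fields that appear in an effect definition. The helper `qualifiedEffectName` is hypothetical (it is not part of Solar2D) and only illustrates how the group falls back to `custom` when it is omitted.

```lua
-- Hypothetical helper, not a Solar2D API: builds "[category].[group].[name]"
-- from the fields of an effect definition table.
local function qualifiedEffectName( kernel )
    -- Solar2D assumes the "custom" group when none is provided
    local group = kernel.group or "custom"
    return kernel.category .. "." .. group .. "." .. kernel.name
end

print( qualifiedEffectName{ category = "filter", name = "myWobble" } )
--> filter.custom.myWobble

print( qualifiedEffectName{ category = "generator", group = "time", name = "pingpong" } )
--> generator.time.pingpong
```

The resulting string is what you would assign to `object.fill.effect`.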

Defining Kernels

Solar2D packages snippets of shader code in the form of kernels. By structuring specific vertex and fragment processing tasks in kernels, the creation of custom effects is dramatically simplified.

Essentially, a kernel is shader code which the main shader program relies upon to handle specific processing tasks. Solar2D supports both vertex kernels and fragment kernels. You must specify at least one kernel type in your effect (or both). If a vertex/fragment kernel is not specified, Solar2D inserts the default vertex/fragment kernel respectively.

Vertex Kernels

Vertex kernels operate on a per-vertex basis, enabling you to modify vertex positions before they are used in the next stage of the pipeline. They must define a VertexKernel function, which accepts an incoming position and can modify that vertex position.

Solar2D’s default vertex kernel simply returns the incoming position:

P_POSITION vec2 VertexKernel( P_POSITION vec2 position )

{

return position;

}

Time

The following time uniforms can be accessed by the vertex kernel:

- P_DEFAULT float CoronaTotalTime — Running time of the app in seconds.

- P_DEFAULT float CoronaDeltaTime — Time in seconds since previous frame.

If you use these variables in your kernel’s shader code, your kernel is implicitly time-dependent.

When using these variables, you need to tell Solar2D that your shader requires the GPU to re-render continuously. Do this by setting the kernel.isTimeDependent property in your kernel definition as indicated below. Note that you should only set this if your shader code is truly time-dependent.

kernel.isTimeDependent = true

Size

The following size uniforms can be accessed by the vertex kernel:

- P_POSITION vec2 CoronaContentScale — The number of content pixels per screen pixel along the x and y axes. Content pixels refer to Solar2D’s coordinate system and are determined by the content scaling settings for your project.

- P_UV vec4 CoronaTexelSize — These values help you understand normalized texture pixels (texels) as they relate to actual pixels. This is useful because texture coordinates are normalized (0 to 1) and normally you only have information about proportion (the percentage of the width or height of the texture). Effectively, these values help you create effects based on actual screen/content pixel distances.

| Value | Definition |
|---|---|
| CoronaTexelSize.xy | The number of texels per screen pixel along the x and y axes. |
| CoronaTexelSize.zw | The number of texels per content pixel along the x and y axes, initially the same as CoronaTexelSize.xy. This is useful in creating CoronaContentScale. |

Coordinate

- P_UV vec2 CoronaTexCoord — The texture coordinate for the vertex.

Example

The following example causes the bottom edge of an image to wobble by a fixed amplitude:

local kernel = {}

-- "filter.custom.myWobble"

kernel.category = "filter"

kernel.name = "myWobble"

-- Shader code uses time environment variable CoronaTotalTime

kernel.isTimeDependent = true

kernel.vertex =

[[

P_POSITION vec2 VertexKernel( P_POSITION vec2 position )

{

P_POSITION float amplitude = 10.0; // GLSL ES has no implicit int-to-float conversion

position.y += sin( 3.0 * CoronaTotalTime + CoronaTexCoord.x ) * amplitude * CoronaTexCoord.y;

return position;

}

]]

Fragment Kernels

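Fragment kernels operate on a per-pixel basis, enabling you to modify each pixel. They must define a FragmentKernel function, which accepts a texture coordinate and returns a color vector.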

Solar2D’s default fragment kernel simply samples a single texture (CoronaSampler0) and, using CoronaColorScale(), modulates it by the display object’s alpha/tint:

P_COLOR vec4 FragmentKernel( P_UV vec2 texCoord )

{

P_COLOR vec4 texColor = texture2D( CoronaSampler0, texCoord );

return CoronaColorScale( texColor );

}

Time

The same vertex kernel time uniforms can be accessed by the fragment kernel.

Size

The same vertex kernel size uniforms can be accessed by the fragment kernel.

Samplers

- P_COLOR sampler2D CoronaSampler0 — The texture sampler for the first texture.

- P_COLOR sampler2D CoronaSampler1 — The texture sampler for the second texture (requires a composite paint).

Alpha/Tint

All display objects have an alpha property. In addition, shape objects have a tint which is set either via object:setFillColor() or the color channel properties.

Generally, your shader should incorporate the effect of these properties into the color that your fragment kernel returns. You can do this by calling the following function to calculate the correct color:

P_COLOR vec4 CoronaColorScale( P_COLOR vec4 color );

This function takes an input color vector (red, green, blue, and alpha channels) and returns a color vector modulated by the display object’s tint and alpha, as shown in the fragment kernel examples. Generally, you should call this function at the end of the fragment kernel so that you can properly calculate the color vector your fragment kernel should return.

Example

The following example brightens an image by a fixed amount per color component:

local kernel = {}

-- "filter.custom.myBrighten"

kernel.category = "filter"

kernel.name = "myBrighten"

kernel.fragment =

[[

P_COLOR vec4 FragmentKernel( P_UV vec2 texCoord )

{

P_COLOR float brightness = 0.5;

P_COLOR vec4 texColor = texture2D( CoronaSampler0, texCoord );

// Pre-multiply the alpha to brightness

brightness = brightness * texColor.a;

// Add the brightness

texColor.rgb += brightness;

// Modulate by the display object's combined alpha/tint.

return CoronaColorScale( texColor );

}

]]

Time Transforms

In the case of time-dependent vertex or fragment kernels, Solar2D will also look for timeTransform. If this exists, it must be a table with one of the following as its func member: "modulo", "pingpong", "sine". The value of CoronaTotalTime within the shader will be the result of any such transformation, rather than the raw underlying time.

The "modulo" transform is computed as CoronaTotalTime = OriginalTotalTime % range, where range is a positive number that may be supplied in the timeTransform table under that same key. By default, range is 1.

The "pingpong" transform is similar, except CoronaTotalTime will first go from 0 to range (no default, in this case), then fall back to 0, and repeat indefinitely.

The "sine" transform is computed as CoronaTotalTime = amplitude * sin(scale * OriginalTotalTime + phase). Again, amplitude and phase may be provided in the timeTransform table, with defaults 1 and 0 respectively. The scale is calculated from a period parameter, a positive number indicating how much time should pass before the sine wave repeats. The default is 2 * π, corresponding to a scale factor of 1.

graphics.defineEffect{

category = "generator", group = "time", name = "pingpong",

isTimeDependent = true, timeTransform = { func = "pingpong", range = 5 },

fragment = [[

P_COLOR vec4 FragmentKernel (P_UV vec2 _)

{

return vec4(0., CoronaTotalTime / 5., 0., 1.);

}

]]

}

local rect = display.newRect(300, 100, 50, 50)

rect.fill.effect = "generator.time.pingpong"

See the precision issues below for the motivation behind these transforms.
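To make the three transforms concrete, here is a rough sketch of them in plain Lua, applied to a raw time value in seconds. The function names are illustrative and not Solar2D APIs. Two details are assumptions rather than documented behavior: the "pingpong" transform is modeled as a triangle wave that takes `range` seconds to rise and `range` seconds to fall, and the "sine" scale is taken to be `2 * π / period`, which is consistent with the default period of 2 * π giving a scale factor of 1.

```lua
-- Illustrative helpers (not Solar2D APIs) that mimic the time transforms.

local function moduloTime( t, range )
    range = range or 1                   -- documented default
    return t % range
end

local function pingpongTime( t, range )
    -- Assumption: rise from 0 to range, then fall back to 0, and repeat
    local phase = t % ( 2 * range )
    if phase <= range then
        return phase
    end
    return 2 * range - phase
end

local function sineTime( t, amplitude, period, phase )
    amplitude = amplitude or 1           -- documented default
    period = period or 2 * math.pi       -- documented default
    phase = phase or 0                   -- documented default
    local scale = 2 * math.pi / period   -- assumption: default period gives scale 1
    return amplitude * math.sin( scale * t + phase )
end

print( moduloTime( 7.5, 5 ) )     --> 2.5
print( pingpongTime( 7.5, 5 ) )   --> 2.5 (on the way back down)
print( sineTime( math.pi / 2 ) )  -- approximately 1
```

Whatever the raw time is, each result stays within a small, fixed range, which is what keeps the value precise once it reaches the GPU.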

Custom Varying Variables

A “varying” variable enables data to be passed from a vertex shader to the fragment shader. The vertex shader outputs this value which corresponds to the positions of the primitive’s vertices. In turn, the fragment shader linearly interpolates this value across the primitive during rasterization.

In Solar2D, you can declare your own varying variables in the shader code. You should put them at the beginning of both your vertex and fragment code.

Example

The following example combines the wobble vertex and brighten fragment kernels. Unlike the "myBrighten" fragment example above, this version does not use a fixed value for brightness. Instead, the vertex shader calculates an oscillating brightness value for each vertex and the fragment shader linearly interpolates the brightness value according to the pixel it’s shading.

local kernel = {}

kernel.category = "filter"

kernel.name = "wobbleAndBrighten"

-- Shader code uses time environment variable CoronaTotalTime

kernel.isTimeDependent = true

kernel.vertex =

[[

varying P_COLOR float delta; // Custom varying variable

P_POSITION vec2 VertexKernel( P_POSITION vec2 position )

{

P_POSITION float amplitude = 10.0; // GLSL ES has no implicit int-to-float conversion

position.y += sin( 3.0 * CoronaTotalTime + CoronaTexCoord.x ) * amplitude * CoronaTexCoord.y;

// Calculate value for varying

delta = 0.4*(CoronaTexCoord.y + sin( 3.0 * CoronaTotalTime + 2.0 * CoronaTexCoord.x ));

return position;

}

]]

kernel.fragment =

[[

varying P_COLOR float delta; // Matches declaration in vertex shader

P_COLOR vec4 FragmentKernel( P_UV vec2 texCoord )

{

// Brightness changes based on interpolated value of custom varying variable

P_COLOR float brightness = delta;

P_COLOR vec4 texColor = texture2D( CoronaSampler0, texCoord );

// Pre-multiply the alpha to brightness

brightness *= texColor.a;

// Add the brightness

texColor.rgb += brightness;

// Modulate by the display object's combined alpha/tint.

return CoronaColorScale( texColor );

}

]]

Effect Parameters

In Solar2D, you can pass effect parameters by setting appropriate properties on the effect of a ShapeObject. These properties depend on the effect. For example, the built-in brightness filter has an intensity parameter that is propagated to the shader code:

object.fill.effect = "filter.brightness"

object.fill.effect.intensity = 0.4

Solar2D supports two methods for adding parameters to custom shader effects. These are mutually exclusive, so you must choose one or the other.

| Method | Description |
|---|---|
| vertex userdata | Parameters are passed on a per-vertex basis. |
| uniform userdata | Parameters are passed as uniforms. This is for effects which require more parameters than can be passed via vertex userdata. |

On devices, OpenGL performs best when you are able to minimize state changes. This is because multiple objects can be batched into a single draw call if there are no state changes required between display objects.

Typically, it’s best to use vertex userdata when you need to pass in effect parameters, because the parameter data can be passed in a vertex array. This maximizes the chance that Solar2D can batch draw calls together. This is especially true if you have numerous consecutive display objects with the same effect applied.

Vertex Userdata

When using vertex userdata to pass effect parameters, the effect parameters are copied for each vertex. To minimize the data size impact, the effect parameters are limited to a vec4, which the shader code reads through the following variable:

P_DEFAULT vec4 CoronaVertexUserData

For example, suppose you want to modify the above "filter.custom.myBrighten" effect example so that, in Lua, there is a "brightness" parameter for the effect:

object.fill.effect = "filter.custom.myBrighten"

object.fill.effect.brightness = 0.3

To accomplish this, you must instruct Solar2D to map the parameter name in Lua with the corresponding component in the vector returned by CoronaVertexUserData. The following code tells Solar2D that the "brightness" parameter is the first component (index = 0) of the CoronaVertexUserData vector.

kernel.vertexData =

{

{

name = "brightness",

default = 0,

min = 0,

max = 1,

index = 0, -- This corresponds to "CoronaVertexUserData.x"

},

}

In the above array (kernel.vertexData), each element is a table and each table specifies:

- name — The string name for the parameter exposed in Lua.

- default — The default value.

- min — The minimum value.

- max — The maximum value.

- index — The index for the corresponding vector component in CoronaVertexUserData:

  - index = 0 → CoronaVertexUserData.x

  - index = 1 → CoronaVertexUserData.y

  - index = 2 → CoronaVertexUserData.z

  - index = 3 → CoronaVertexUserData.w

Finally, modify the FragmentKernel to read the parameter value, accessing the parameter value via CoronaVertexUserData:

kernel.fragment =

[[

P_COLOR vec4 FragmentKernel( P_UV vec2 texCoord )

{

P_COLOR float brightness = CoronaVertexUserData.x;

...

}

]]
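Putting the pieces of this section together, a complete parameterized version of the brighten effect might look like the sketch below. The kernel body is the "myBrighten" example with the fixed brightness swapped for `CoronaVertexUserData.x`. The file name `image.png` is a placeholder, and the `if graphics` guard is an addition made here so the snippet also loads outside the Solar2D runtime.

```lua
local kernel = {}

kernel.category = "filter"
kernel.name = "myBrighten"   -- full name: "filter.custom.myBrighten"

-- Map the Lua-side "brightness" parameter to CoronaVertexUserData.x
kernel.vertexData =
{
    { name = "brightness", default = 0, min = 0, max = 1, index = 0 },
}

kernel.fragment =
[[
P_COLOR vec4 FragmentKernel( P_UV vec2 texCoord )
{
    // Read the parameter instead of using a fixed value
    P_COLOR float brightness = CoronaVertexUserData.x;
    P_COLOR vec4 texColor = texture2D( CoronaSampler0, texCoord );

    // Pre-multiply the alpha to brightness, then add it
    brightness = brightness * texColor.a;
    texColor.rgb += brightness;

    // Modulate by the display object's combined alpha/tint
    return CoronaColorScale( texColor );
}
]]

-- Register and apply the effect only when running inside Solar2D
if graphics and graphics.defineEffect then
    graphics.defineEffect( kernel )

    local object = display.newImage( "image.png" )   -- placeholder file name
    if object then
        object.fill.effect = "filter.custom.myBrighten"
        object.fill.effect.brightness = 0.3
    end
end
```

Because the parameter travels in the vertex data, several objects using this effect with different brightness values can still share a draw call.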

Uniform Userdata

(forthcoming feature)

Vertex Textures

If the device supports it, you can also sample textures in the vertex kernel. On the Solar2D side, calling system.getInfo("maxVertexTextureUnits") will give you the number of available samplers as the return value. This is also available in the vertex kernel as gl_MaxVertexTextureImageUnits.

Any texture meant for use by vertex kernels should be created with both sampling filters set to "nearest" and use level-of-detail (LOD) sampling. You can declare samplers in the vertex code manually as uniform sampler2D NAME, where NAME is some unused name of your choosing. (TODO: with more than one, the declaration order probably maps to sampler order, but this needs confirmation)

kernel.vertex =

[[

uniform sampler2D us_Vertices; // vertex sampler #1

P_POSITION vec2 VertexKernel (P_POSITION vec2 pos)

{

if (gl_MaxVertexTextureImageUnits > 0)

{

...

P_COLOR vec4 verts = texture2DLod(us_Vertices, vec2(offset, 0.), 0.);

...

}

else return vec2(0.); // VertexKernel returns a vec2

}

]]

GLSL Conventions and Best Practices

GLSL has many flavors across mobile and desktop. Solar2D assumes the use of GLSL ES.

Solar2D Simulator vs Device

Performance

Shader performance on desktop GPUs will not be the same as on devices. Therefore, even if you test your shader in the Solar2D Simulator or in the Solar2D Shader Playground, you should also run it on actual devices to be sure that you are getting the desired performance.

Also note that if you are supporting devices from different manufacturers, the performance between them could vary significantly. On Android in particular, some

Syntax

The Solar2D Simulator compiles your shader using desktop GLSL. Consequently, if you run your shader in the Solar2D Simulator, your shader may still contain GLSL ES errors that will not appear until you attempt to run your shader on a device.

If you have a fragment-only kernel shader effect, you can test out your shader code in the Solar2D Shader Playground. This playground verifies against GLSL ES in a

Precision Qualifier Macros

Unlike other flavors of GLSL, GLSL ES relies on precision qualifiers in variable declarations.

Instead of using raw precision qualifiers like lowp, you should use one of the following precision qualifier macros. The defaults are optimized for the type of data:

- P_DEFAULT — For generic values; default is highp.

- P_RANDOM — For random values; default is highp.

- P_POSITION — For positions; default is mediump.

- P_NORMAL — For normals; default is mediump.

- P_UV — For texture coordinates; default is mediump.

- P_COLOR — For pixel colors; default is lowp.

We strongly recommend you use Solar2D’s defaults for shader precision, all of which have been optimized to balance performance and fidelity. However, your project can override these settings in config.lua (guide).

High-Precision Devices

Not all devices support high precision. Therefore, if your kernel requires high precision, you should use the GL_FRAGMENT_PRECISION_HIGH macro. This is 1 if high precision is supported on the device, or undefined otherwise.

If the device does not support highp, your kernel can gracefully degrade by writing two implementations:

P_COLOR vec4 FragmentKernel( P_UV vec2 texCoord )

{

#ifdef GL_FRAGMENT_PRECISION_HIGH

// Code path for high precision calculations

#else

// Code path for fallback

#endif

}

Pre-Multiplied Alpha

Solar2D provides textures with pre-multiplied alpha. Therefore, you may need to divide by the alpha to recover the original RGB values. However, for performance reasons, you should try to perform calculations to avoid the divide. Compare the following two kernels that brighten an image:

- In the following, the original RGB values are recovered by undoing the pre-multiplied alpha, and later, the alpha is re-applied. This is not ideal because it generates a lot of additional operations on the GPU for every pixel.

// Non-optimal Version

P_COLOR vec4 FragmentKernel( P_UV vec2 texCoord )

{

P_COLOR float brightness = 0.5;

P_COLOR vec4 texColor = texture2D( CoronaSampler0, texCoord );

// BAD: Recover original RGBs via divide

texColor.rgb /= texColor.a;

// Add the brightness

texColor.rgb += brightness;

// BAD: Re-apply the pre-multiplied alpha

texColor.rgb *= texColor.a;

return CoronaColorScale( texColor );

}

- This version pre-multiplies the alpha of the texture to the brightness variable so that it can be added directly to the texture’s RGB values. This circumvents the deficiencies of the above implementation.

// Optimal Version

P_COLOR vec4 FragmentKernel( P_UV vec2 texCoord )

{

P_COLOR float brightness = 0.5;

P_COLOR vec4 texColor = texture2D( CoronaSampler0, texCoord );

// GOOD: Pre-multiply the alpha to brightness

brightness = brightness * texColor.a;

// Add the brightness

texColor.rgb += brightness;

return CoronaColorScale( texColor );

}
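The two kernels above produce the same color, which can be checked with ordinary arithmetic. The following sketch works through a single color channel in plain Lua; the sample values are arbitrary and were picked so that every intermediate result is exact in binary floating point.

```lua
-- One channel of one texel: straight (non-premultiplied) value and alpha
local straight, alpha, brightness = 0.5, 0.5, 0.25

-- What the sampler returns, since textures carry pre-multiplied alpha
local premultiplied = straight * alpha

-- Non-optimal version: divide by alpha, add, multiply by alpha again
local viaDivide = ( premultiplied / alpha + brightness ) * alpha

-- Optimal version: scale the brightness once, then add
local viaPremultiply = premultiplied + brightness * alpha

print( viaDivide, viaPremultiply )   -- both are 0.375
```

The second form reaches the same value with one multiply and one add, and it also sidesteps the divide when alpha is 0.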

Vector Calculations

Some devices do not have GPUs with vector processors. In those cases, vector calculations may be performed on a scalar processor. Generally, you should carefully consider the order of operations in your shader to ensure that unnecessary calculations can be avoided on a scalar processor.

Consolidate Scalar Calculations

In the following example, a vector processor would execute each multiplication in parallel. However, because of the order of operations, a scalar processor would perform 8 multiplications, even though two of the three operands are scalar values.

P_DEFAULT float f0, f1;

P_DEFAULT vec4 v0, v1;

v0 = (v1 * f0) * f1; // BAD: Multiply each scalar to a vector

A better ordering would be to multiply the two scalars first, then multiply the result against the vector. This reduces the calculation to 5 multiplies.

highp float f0, f1;

highp vec4 v0, v1;

v0 = v1 * (f0 * f1); // GOOD: Multiply scalars first
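The counts of 8 and 5 can be reproduced by simulating a scalar processor that handles a vec4 one component at a time. This is only a counting sketch in plain Lua; `mul` and `scale` are illustrative helpers, and real GPU compilers may reorder operations on their own.

```lua
local multiplies = 0

-- Multiply two numbers and count the operation
local function mul( a, b )
    multiplies = multiplies + 1
    return a * b
end

-- Scale a 4-component vector component by component,
-- as a scalar processor would
local function scale( v, s )
    return { mul( v[1], s ), mul( v[2], s ), mul( v[3], s ), mul( v[4], s ) }
end

local v1, f0, f1 = { 1, 2, 3, 4 }, 0.5, 4

multiplies = 0
local bad = scale( scale( v1, f0 ), f1 )    -- (v1 * f0) * f1
local badCount = multiplies

multiplies = 0
local good = scale( v1, mul( f0, f1 ) )     -- v1 * (f0 * f1)
local goodCount = multiplies

print( badCount, goodCount )   -- 8 multiplies versus 5
```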

Use Write Masks

Similar logic applies when your vector calculation does not use all components. A “write mask” allows you to limit the calculations to only the components specified in the mask. The following runs twice as fast on a scalar processor because the write mask is used to specify that only two of the four components are needed.

highp vec4 v0;

highp vec4 v1;

highp vec4 v2;

v2.xz = (v0 * v1).xz; // GOOD: Write mask limits calculations

Avoid Dynamic Texture Lookups

When a fragment shader samples textures at a location different from the texture coordinate passed to the shader, it causes a dynamic texture lookup, also known as a dependent texture read.

In contrast, effects that have no dependent texture reads enable the GPU to prefetch texel data before the shader executes.

Avoid Branching and Loops

Branching instructions (if conditions) are expensive. When possible, for loops should be unrolled or replaced by vector operations.

Precision Issues

Solar2D’s shaders use IEEE-754 floats as the underlying representation for numbers.

In the majority of cases—the exceptions being irrelevant here—part of a floating-point number specifies an integer numerator. Let’s call this N. Our numerator can go from 0 to D - 1, where D is a fixed power of 2. Together these give us a scale factor t = N / D in the range [0, 1).

The rest of the number is devoted to the sign (positive or negative) and an exponent, the latter being another integer that gives us a power of 2, for instance 2^-3 or 2^5.

We decode our numbers by interpolating between neighboring powers, with exponents p and p + 1, using the scale factor: result = 2^p * (1 + t). Notice that, if t were 1, we would be on the next power of 2.
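The decoding rule can be tried out directly. The sketch below is a simplified model in plain Lua that ignores the special cases the text sets aside; `decode` is an illustrative function, not something Solar2D provides.

```lua
-- Decode a value from its sign (1 or -1), exponent p, and numerator N,
-- where N ranges from 0 to D - 1
local function decode( sign, p, N, D )
    local t = N / D                 -- scale factor in [0, 1)
    return sign * 2 ^ p * ( 1 + t )
end

local onPower  = decode( 1, 7, 0, 1024 )      -- 128, exactly 2^7
local halfway  = decode( 1, 7, 512, 1024 )    -- 192, midway between 128 and 256
local fraction = decode( -1, -3, 256, 1024 )  -- -0.15625

print( onPower, halfway, fraction )
```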

This can exactly represent some values, but will only approximate most. Any format is going to have tradeoffs. IEEE-754 offers considerable accuracy near 0, as well as exact integers all the way up to 2 * D.

On the CPU side of things, Lua gives us 64-bit floats, with rather generous 52-bit numerators. On GPUs we are rarely so lucky, especially on mobile hardware, owing to concerns like bandwidth and memory.

For instance, see the “Qualifiers” section in the OpenGL ES 2.0 reference card. With mediump our D is only guaranteed to be an underwhelming 1024.

Now imagine what this means for time, measured in seconds. At first, we’ll be totally fine. But just after the two-minute mark, interpolating between 128 and 256, we can only take steps of (256 - 128) / 1024, or 1/8th of a second. At five minutes we’ll proceed in increments of 1/4, and so on. Anything relying on such results becomes quite choppy.
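The step sizes quoted above follow from dividing the lower power of 2 by D. Here is a small sketch of that calculation in plain Lua, assuming time values of at least one second; `floorPowerOfTwo` and `stepSize` are illustrative names.

```lua
-- Largest power of 2 that does not exceed t (assumes t >= 1)
local function floorPowerOfTwo( t )
    local p = 1
    while p * 2 <= t do
        p = p * 2
    end
    return p
end

-- Spacing between neighboring representable values near t
local function stepSize( t, D )
    return floorPowerOfTwo( t ) / D
end

print( stepSize( 130, 1024 ) )     -- 0.125: just past the two-minute mark
print( stepSize( 300, 1024 ) )     -- 0.25: at five minutes
print( stepSize( 300, 2 ^ 23 ) )   -- roughly 0.00003 with a 23-bit numerator
```

The last line shows why the same time value is far less of a problem before it is handed to the GPU at reduced precision.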

This scenario is gloomier than it needs to be, however. The time is actually maintained in Solar2D as a single-precision float, with a respectable 23-bit numerator; the loss comes after it makes its way to the GPU. Furthermore, many shaders want transformed results, something like TrueTotalTime % X or sin(N * TrueTotalTime), whose absolute values are likely to be in the more precise lower numeric ranges. Time transforms let us do some of the more common possibilities on the Solar2D side and pass the nicer results along.